Analyzing images using Azure Cognitive Services

The Computer Vision API of Azure Cognitive Services can analyze and describe images. It’s a rich API that also supports face and landmark recognition, as well as automatic image description and tagging. This blog post shows how to use the image analysis features of Azure Cognitive Services.

Source code available! This blog post is based on an ASP.NET MVC demo application available in the GitHub repository gpeipman/CognitiveServicesDemo. To try it out, you need an Azure account and a few Cognitive Services accounts. The free tiers of those services are enough to run my solution.

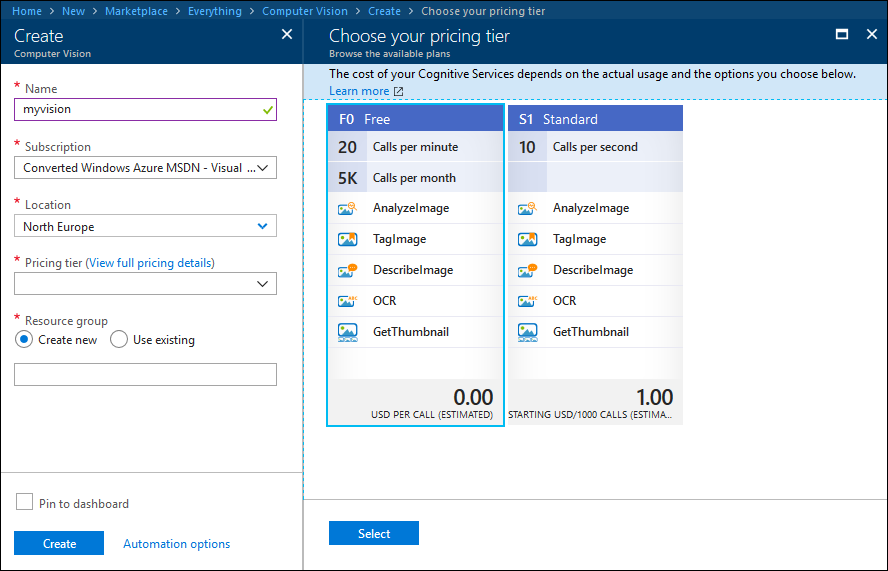

Creating Azure Computer Vision account

To use the Computer Vision API, we need to create a Computer Vision account on Microsoft Azure. The free tier offers the same services as the paid one, but it is limited to 20 analyzed images per minute. That is more than enough for testing the service.
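If you batch-test many images on the free tier, a small client-side throttle can help you stay under the 20-images-per-minute limit. The following is a minimal sketch of a sliding-window limiter. The class name `SlidingWindowLimiter` and the injectable clock are hypothetical, not part of the Cognitive Services SDK. The class only decides whether a call may go out now; it never talks to Azure.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical client-side throttle (not part of the Cognitive Services SDK):
// allows at most maxCalls calls within any sliding time window.
public sealed class SlidingWindowLimiter
{
    private readonly int _maxCalls;
    private readonly TimeSpan _window;
    private readonly Func<DateTime> _clock;
    private readonly Queue<DateTime> _calls = new Queue<DateTime>();
    private readonly object _sync = new object();

    // The clock is injectable so the limiter can be tested without waiting.
    public SlidingWindowLimiter(int maxCalls, TimeSpan window, Func<DateTime> clock = null)
    {
        if (maxCalls <= 0) throw new ArgumentOutOfRangeException(nameof(maxCalls));
        if (window <= TimeSpan.Zero) throw new ArgumentOutOfRangeException(nameof(window));
        _maxCalls = maxCalls;
        _window = window;
        _clock = clock ?? (() => DateTime.UtcNow);
    }

    // Returns true (and records the call) if one more call fits in the window,
    // or false if the caller should wait before sending another image.
    public bool TryAcquire()
    {
        lock (_sync)
        {
            var now = _clock();

            // Forget calls that have slid out of the window.
            while (_calls.Count > 0 && now - _calls.Peek() >= _window)
            {
                _calls.Dequeue();
            }

            if (_calls.Count >= _maxCalls)
            {
                return false;
            }

            _calls.Enqueue(now);
            return true;
        }
    }
}
```

In the demo, you could keep one shared instance created as `new SlidingWindowLimiter(20, TimeSpan.FromMinutes(1))` and check `TryAcquire()` before each analysis call. If it returns false, show a "try again later" message instead of calling the API. A client-side limiter is only a courtesy, and the service still enforces its own limit.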

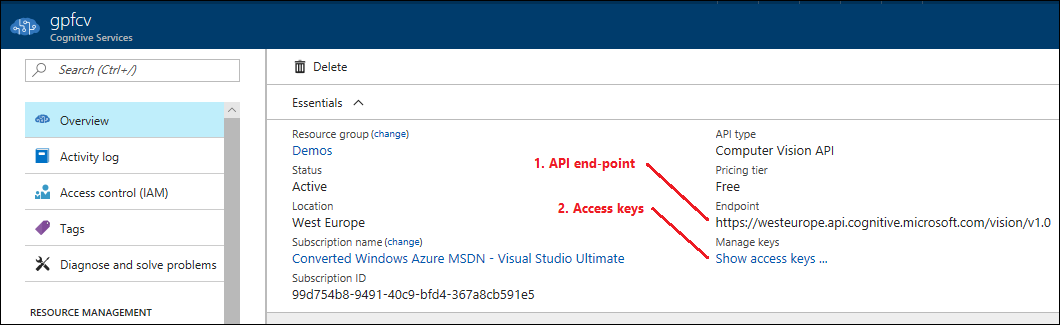

Configuring application

The demo application needs to be configured before it can run. There are two settings: the API endpoint address and the access key. Both are available in the Azure Portal after the service account has been created.

The first step is to create a keys.config file in the application root directory and define the API key there.

```xml
<appSettings>
  <add key="CognitiveServicesVisionApiKey" value="Your API key here" />
</appSettings>
```

Also make sure that the CognitiveServicesVisionApiUrl setting in web.config matches the endpoint of the account created earlier.

Analyzing images using Computer Vision API

To analyze images, we need an instance of the Azure Computer Vision API client.

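```csharp
using System.Configuration;
using Microsoft.ProjectOxford.Vision;

// Both settings come from appSettings (keys.config and web.config)
var apiKey = ConfigurationManager.AppSettings["CognitiveServicesVisionApiKey"];
var apiRoot = ConfigurationManager.AppSettings["CognitiveServicesVisionApiUrl"];

// VisionServiceClient is a property of the controller holding the API client
VisionServiceClient = new VisionServiceClient(apiKey, apiRoot);
```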
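A missing keys.config file or a mistyped endpoint usually shows up only later, as a failed API call. As a minimal sketch, assuming you want the app to fail fast instead, the hypothetical `VisionSettings` helper below reads the same two settings and rejects missing or malformed values. It assumes the endpoint must be an absolute https URL. Because `ConfigurationManager.AppSettings` is a `NameValueCollection`, the helper takes one directly and can be tested without a config file.

```csharp
using System;
using System.Collections.Specialized;

// Hypothetical helper (not from the demo): reads the two app settings the demo
// uses and fails fast with a clear message if either is missing or malformed.
public static class VisionSettings
{
    public const string KeyName = "CognitiveServicesVisionApiKey";
    public const string UrlName = "CognitiveServicesVisionApiUrl";

    public static (string ApiKey, string ApiUrl) Read(NameValueCollection settings)
    {
        if (settings == null) throw new ArgumentNullException(nameof(settings));

        var apiKey = settings[KeyName];
        if (string.IsNullOrWhiteSpace(apiKey))
        {
            throw new InvalidOperationException(
                $"App setting '{KeyName}' is missing. Is keys.config in place?");
        }

        // Assumption: the endpoint must be an absolute https URL.
        var apiUrl = settings[UrlName];
        if (!Uri.TryCreate(apiUrl, UriKind.Absolute, out var uri) || uri.Scheme != Uri.UriSchemeHttps)
        {
            throw new InvalidOperationException(
                $"App setting '{UrlName}' must be an absolute https URL.");
        }

        return (apiKey, apiUrl);
    }
}
```

With this helper, client creation becomes `var settings = VisionSettings.Read(ConfigurationManager.AppSettings);` followed by `VisionServiceClient = new VisionServiceClient(settings.ApiKey, settings.ApiUrl);`.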

I wrote a simple controller action to analyze images.

```csharp
public async Task<ActionResult> Index()
{
    if (Request.HttpMethod == "GET")
    {
        return View("Index");
    }

    var model = new DescribeImageModel();

    // What features to find in the image
    var features = new[]
    {
        VisualFeature.Adult, VisualFeature.Categories, VisualFeature.Color, VisualFeature.Description,
        VisualFeature.Faces, VisualFeature.ImageType, VisualFeature.Tags
    };

    // Analyze image using a copy of the input stream
    await RunOperationOnImage(async stream =>
    {
        model.Result = await VisionServiceClient.AnalyzeImageAsync(stream, features);
    });

    // Convert image to a base64 image string (MemoryStream needs using System.IO).
    // CopyToAsync reads the whole stream; a single ReadAsync call may return fewer bytes.
    await RunOperationOnImage(async stream =>
    {
        using (var buffer = new MemoryStream())
        {
            await stream.CopyToAsync(buffer);
            var base64 = Convert.ToBase64String(buffer.ToArray());
            model.ImageDump = String.Format("data:image/png;base64,{0}", base64);
        }
    });

    return View(model);
}
```

Here is what it does:

- Return immediately if it is a GET request (the user has just opened the page)

- Create an array of features to detect in the image

- Analyze the image using a copy of the request stream, because API calls dispose the input stream

- Create a base64 image string

- Return the filled model to the view
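The controller relies on `RunOperationOnImage`, whose body the post doesn't show. Here is a minimal sketch of how such a helper could work, assuming the uploaded file's stream is passed in and is seekable (as ASP.NET upload streams normally are). The `ImageStreams` class and this signature are hypothetical, not the demo's actual code. The helper rewinds the upload and buffers it. It then gives each operation its own read-only `MemoryStream`, so an API call that disposes its stream cannot affect the original upload or the next operation.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class ImageStreams
{
    // Hypothetical sketch of a RunOperationOnImage-style helper.
    // Each call copies the source into memory and hands the operation an
    // independent stream positioned at 0, which it may read or dispose freely.
    public static async Task RunOperationOnImage(Stream source, Func<Stream, Task> operation)
    {
        if (source == null) throw new ArgumentNullException(nameof(source));
        if (operation == null) throw new ArgumentNullException(nameof(operation));

        // Rewind in case an earlier call already read the upload.
        // Assumption: the source is seekable; otherwise a second call sees no data.
        if (source.CanSeek)
        {
            source.Position = 0;
        }

        byte[] bytes;
        using (var copy = new MemoryStream())
        {
            await source.CopyToAsync(copy);
            bytes = copy.ToArray();
        }

        // Disposing this copy (even twice) never touches the original stream.
        using (var stream = new MemoryStream(bytes, writable: false))
        {
            await operation(stream);
        }
    }
}
```

This trades memory for simplicity because the whole image is held in memory once per operation. For the image sizes this demo handles, that is an acceptable trade.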

Here is the view for the Index action.

```cshtml
@model DescribeImageModel
@{
    ViewBag.Title = "Describe image";
}

<h2>Describe image</h2>

@Html.Partial("_Upload")

@if (Model != null && !string.IsNullOrEmpty(Model.ImageDump))
{
    <img src="@Model.ImageDump" width="600" />

    <h3>Description</h3>

    <table class="table-bordered">
        <tr>
            <th>Adult content</th>
            <td>@Model.Result.Adult.IsAdultContent</td>
        </tr>
        <tr>
            <th>Racy content</th>
            <td>@Model.Result.Adult.IsRacyContent</td>
        </tr>
        <tr>
            <th>Categories</th>
            <td>@string.Join(", ", Model.Result.Categories.Select(c => c.Name + " (" + c.Detail + ")").ToArray())</td>
        </tr>
        <tr>
            <th>Accent color</th>
            <td>@Model.Result.Color.AccentColor</td>
        </tr>
        <tr>
            <th>Dominant background color</th>
            <td>@Model.Result.Color.DominantColorBackground</td>
        </tr>
        <tr>
            <th>Dominant foreground color</th>
            <td>@Model.Result.Color.DominantColorForeground</td>
        </tr>
        <tr>
            <th>Dominant colors</th>
            <td>@string.Join(", ", Model.Result.Color.DominantColors)</td>
        </tr>
        <tr>
            <th>Black-white</th>
            <td>@Model.Result.Color.IsBWImg</td>
        </tr>
        <tr>
            <th>Description</th>
            <td>
                @foreach (var cap in Model.Result.Description.Captions)
                {
                    <text>@cap.Text <br /></text>
                }
            </td>
        </tr>
        <tr>
            <th>Clip art type</th>
            <td>@Model.Result.ImageType.ClipArtType</td>
        </tr>
        <tr>
            <th>Line drawing type</th>
            <td>@Model.Result.ImageType.LineDrawingType</td>
        </tr>
        <tr>
            <th>Metadata</th>
            <td>@Model.Result.Metadata.Format (@Model.Result.Metadata.Width x @Model.Result.Metadata.Height)</td>
        </tr>
        <tr>
            <th>Tags</th>
            <td>@string.Join(", ", Model.Result.Tags.Select(t => t.Name).ToArray())</td>
        </tr>
    </table>
}
```
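The view puts `ImageDump` straight into the `img` tag's `src`, and the controller always labels the data as `data:image/png`, even for JPEG uploads. Most browsers tolerate that, but a more accurate label is easy to produce. The following is a minimal sketch, assuming you would rather detect the format from the file's leading "magic" bytes. The `ImageDataUri` class is hypothetical and recognizes only PNG, JPEG, GIF and BMP, falling back to `application/octet-stream` for anything else.

```csharp
using System;

// Hypothetical helper: builds a data URI whose MIME type is taken from the
// image's leading signature bytes instead of always assuming PNG.
public static class ImageDataUri
{
    public static string Create(byte[] bytes)
    {
        if (bytes == null) throw new ArgumentNullException(nameof(bytes));
        return $"data:{DetectMimeType(bytes)};base64,{Convert.ToBase64String(bytes)}";
    }

    public static string DetectMimeType(byte[] b)
    {
        if (StartsWith(b, 0x89, 0x50, 0x4E, 0x47)) return "image/png";   // "\x89PNG"
        if (StartsWith(b, 0xFF, 0xD8, 0xFF)) return "image/jpeg";        // JPEG start-of-image marker
        if (StartsWith(b, 0x47, 0x49, 0x46, 0x38)) return "image/gif";   // "GIF8"
        if (StartsWith(b, 0x42, 0x4D)) return "image/bmp";               // "BM"
        return "application/octet-stream";                                // unknown format
    }

    private static bool StartsWith(byte[] data, params byte[] prefix)
    {
        if (data.Length < prefix.Length) return false;
        for (int i = 0; i < prefix.Length; i++)
        {
            if (data[i] != prefix[i]) return false;
        }
        return true;
    }
}
```

In the controller's second `RunOperationOnImage` call, `model.ImageDump = ImageDataUri.Create(buffer.ToArray());` would then replace the hardcoded PNG prefix.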

With all the important work done, let’s try to analyze some images.

Trying out Computer Vision API

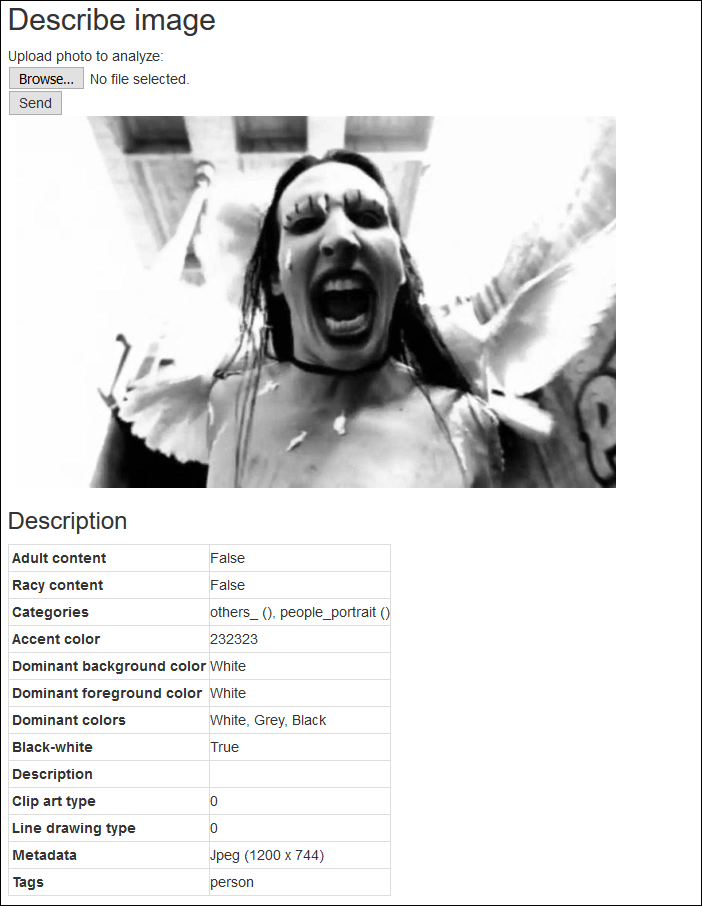

Let’s see what the Computer Vision API does with different photos, starting with a nice photo of Marilyn Manson.

Although there is a celebrity in the photo, he was not recognized. Also notice that the description is missing and the Computer Vision API returned only one tag: Person.

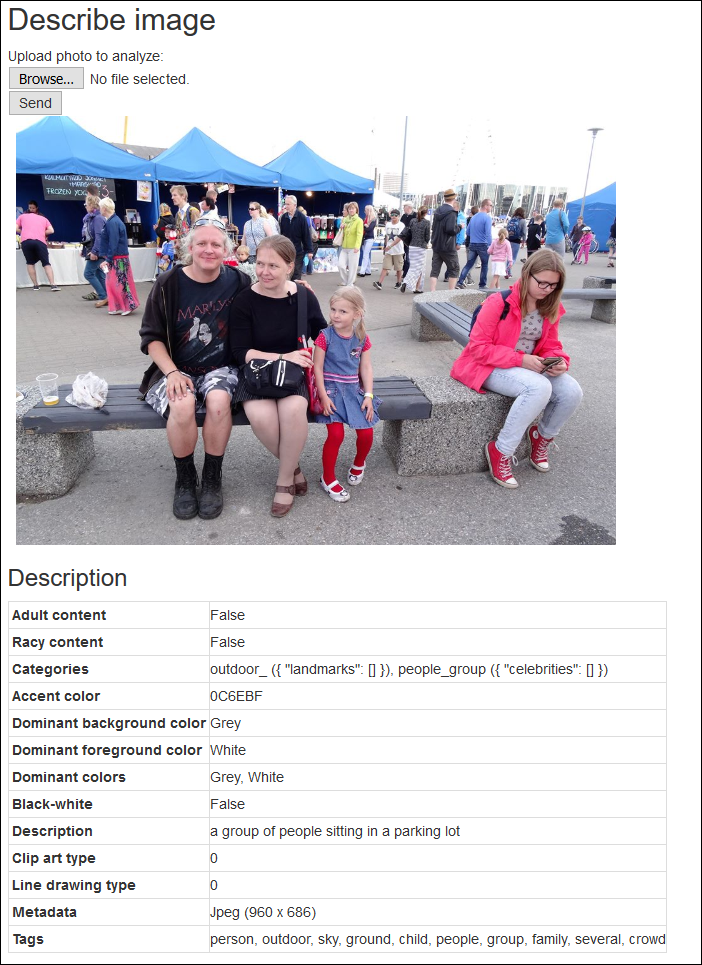

Now let’s try a photo of my family. The Face API of Cognitive Services is able to recognize all of us.

Now things got a little better. The service detected that we are outdoors and that the place where we sat was probably a parking lot. We also got more tags this time.

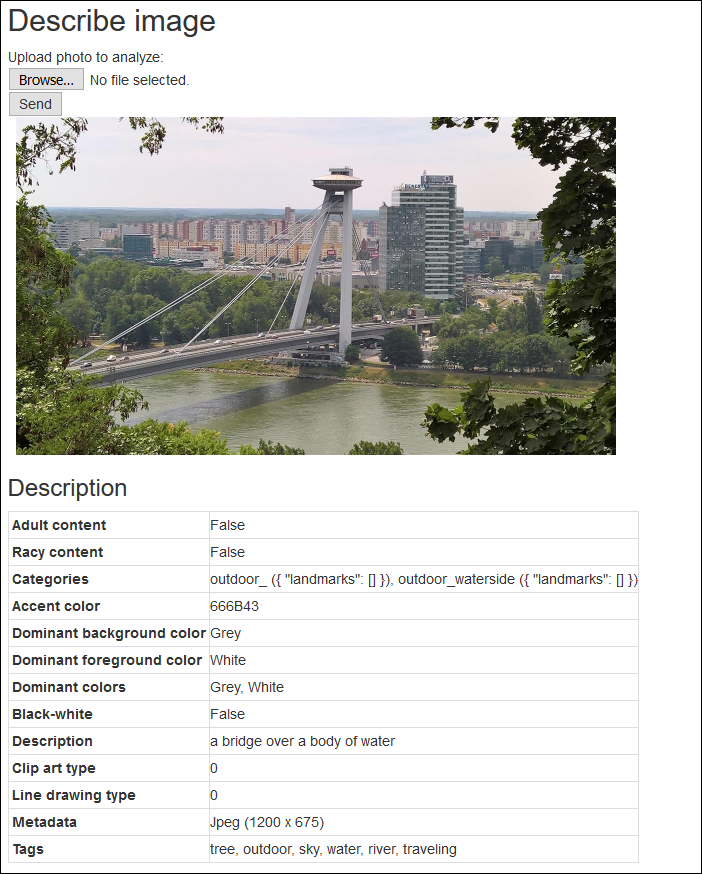

The next thing to analyze is the famous UFO Bridge in the beautiful city of Bratislava.

Categories, tags and description are okay, but the famous landmark is not recognized. Oh, God, why?

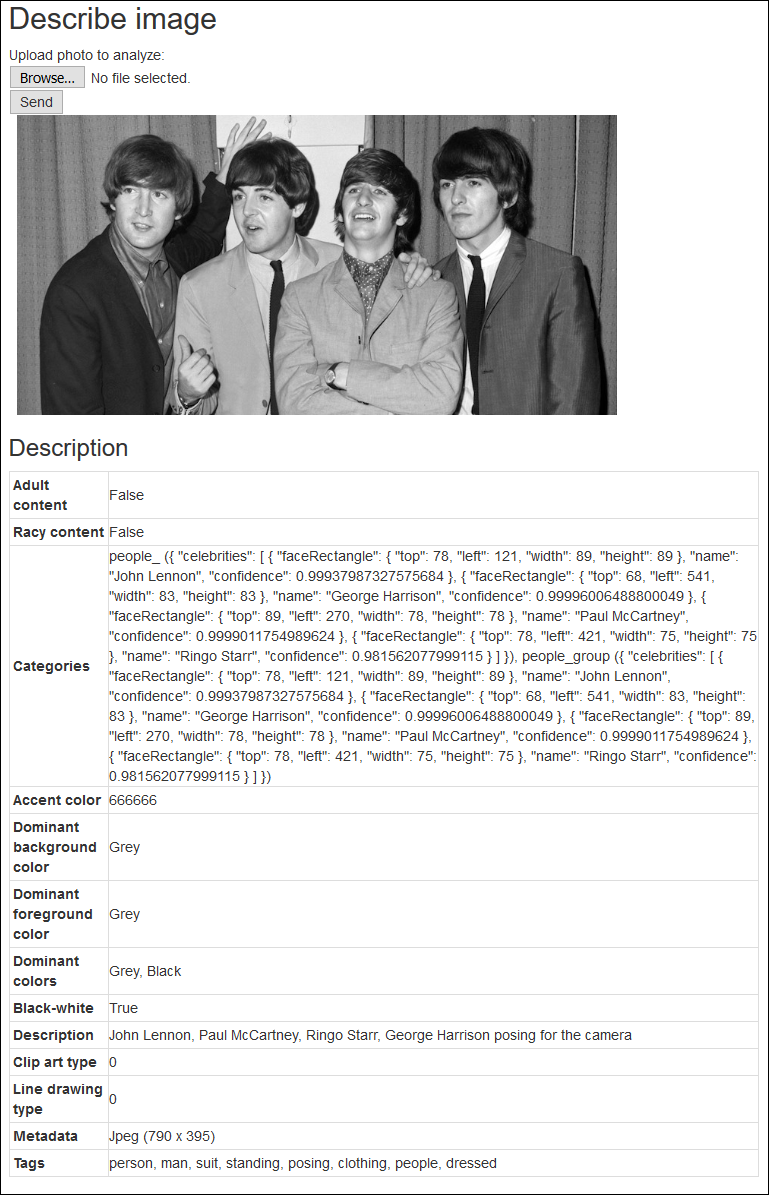

Finally, I tried a photo of perhaps the most famous band in the world: The Beatles.

Now things got way better. Notice that every person in the photo was detected with a face rectangle. The description also seems okay, but the tags seem weird to me.

So, here are the results:

- Recognizing people defined in Face API person groups is not (yet?) supported

- Not all celebrities and landmarks are recognized

- Descriptions are sometimes okay and sometimes not very much to the point

- Tags work okay, but in most cases some of them don’t make sense

Still, I’m okay with the results we got, and I hope the service will improve over time.

Wrapping up

Analyzing photos with the Computer Vision API is simple and fun. A free service tier is available, and in most cases we got pretty good results. Because the service’s data sets are still young, not all celebrities and famous landmarks are recognized. I hope more celebrities and landmarks will be added to the service soon.
