Detecting faces on photos using Microsoft Cognitive Services

I started playing with Microsoft Cognitive Services and discovered that it makes many otherwise complex tasks very easy. This blog post shows how to build a web application that detects faces in an uploaded photo.

Cognitive services account

You need a Microsoft Cognitive Services account before you can use the services. All you need to sign up is a Microsoft Account or an Office 365 account. After authenticating you request a service key and wait a few moments until it is generated and ready to use. Most of the services can be used for free at small scale.

Building cognitive services client

To use the cognitive services APIs we need two settings that we keep in the web.config file:

- CognitiveServicesKey – the key we get when signing up for cognitive services

- CognitiveServicesApiUrl – API end-point, for example: https://westus.api.cognitive.microsoft.com
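As a minimal sketch, the two settings could look like this in the appSettings section of web.config. The key value is a placeholder and the URL is only the example region from above; use the values shown for your own account.

```xml
<configuration>
  <appSettings>
    <!-- Placeholder value: replace with your own service key -->
    <add key="CognitiveServicesKey" value="your-service-key-here" />
    <!-- Example end-point; use the region your key was issued for -->
    <add key="CognitiveServicesApiUrl" value="https://westus.api.cognitive.microsoft.com" />
  </appSettings>
</configuration>
```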

The sample application is available in my GitHub repository CognitiveServicesDemo. Be warned that the code of this application is very raw and experimental right now, but I will improve it over time.

The client class with the call to the face detection service is here. It reads settings from the web application config file, builds the URL and uploads the image to the cognitive services API for analysis.

    using System.Collections.Generic;
    using System.Configuration;
    using System.IO;
    using System.Net;
    using System.Net.Http;
    using System.Net.Http.Headers;
    using System.Threading.Tasks;
    using Newtonsoft.Json;

    public static class CognitiveServicesClient
    {
        private static readonly string ApiKey = ConfigurationManager.AppSettings["CognitiveServicesKey"];
        private static readonly string ApiUrl = ConfigurationManager.AppSettings["CognitiveServicesApiUrl"];

        // Uploads the image to the face detection end-point and returns the detected faces
        public static async Task<List<DetectedFace>> DetectFaces(Stream image)
        {
            using (var client = new HttpClient())
            {
                var content = new StreamContent(image);
                var url = ApiUrl + "/face/v1.0/detect?returnFaceId=true&returnFaceLandmarks=true";

                client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", ApiKey);
                content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

                var httpResponse = await client.PostAsync(url, content);
                if (httpResponse.StatusCode == HttpStatusCode.OK)
                {
                    var responseBody = await httpResponse.Content.ReadAsStringAsync();
                    return JsonConvert.DeserializeObject<List<DetectedFace>>(responseBody);
                }
            }

            // Return an empty list on failure so callers can iterate the result safely
            return new List<DetectedFace>();
        }
    }

The code doesn’t build until we add the DTO classes. First, we need a class that represents a point with floating-point coordinates.

    public class FacePoint
    {
        public double X;
        public double Y;
    }

The API also returns a special object that describes different points of the face it found. Cognitive services use the term face landmarks for these points.

    public class FaceLandmarks
    {
        public FacePoint PupilLeft;
        public FacePoint PupilRight;
        public FacePoint NoseTip;
        public FacePoint MouthLeft;
        public FacePoint MouthRight;
        public FacePoint EyebrowLeftOuter;
        public FacePoint EyebrowLeftInner;
        public FacePoint EyeLeftOuter;
        public FacePoint EyeLeftTop;
        public FacePoint EyeLeftBottom;
        public FacePoint EyeLeftInner;
        public FacePoint EyebrowRightInner;
        public FacePoint EyebrowRightOuter;
        public FacePoint EyeRightInner;
        public FacePoint EyeRightTop;
        public FacePoint EyeRightBottom;
        public FacePoint EyeRightOuter;
        public FacePoint NoseRootLeft;
        public FacePoint NoseRootRight;
        public FacePoint NoseLeftAlarTop;
        public FacePoint NoseRightAlarTop;
        public FacePoint NoseLeftAlarOutTip;
        public FacePoint NoseRightAlarOutTip;
        public FacePoint UpperLipTop;
        public FacePoint UpperLipBottom;
        public FacePoint UnderLipTop;
        public FacePoint UnderLipBottom;
    }

We also get back a rectangle around the face. Cognitive services call it the face rectangle.

    public class FaceRectangle
    {
        public int Left;
        public int Top;
        public int Width;
        public int Height;

        public Rectangle ToRectangle()
        {
            return new Rectangle(Left, Top, Width, Height);
        }
    }

I added a ToRectangle() method here that converts the face rectangle to the Rectangle type used by System.Drawing classes. We need it later. As the API returns a list of the faces detected in the photo, we need one general DTO to keep everything about a face together.

    public class DetectedFace
    {
        public string FaceId;
        public FaceRectangle FaceRectangle;
        public FaceLandmarks FaceLandmarks;
    }

Now we have all the classes needed to communicate with the face detection service and it’s time to build a UI for the service client.

Building face detection web page

I created a simple ASP.NET MVC 5 application because there we have stable support for processing images. Our face detection page works as follows:

- show the photo upload form and submit button

- if a photo was uploaded then analyze it

- if there were faces in the photo then draw rectangles around them

- return the photo as an inline image string

- show the photo on the page

Here is the DetectFaces method of HomeController.

    public async Task<ActionResult> DetectFaces()
    {
        if (Request.HttpMethod == "GET")
        {
            return View();
        }

        // Nothing to analyze if the form was posted without a file
        if (Request.Files.Count == 0 || Request.Files[0].ContentLength == 0)
        {
            return View();
        }

        var imageResult = "";
        var file = Request.Files[0];
        IList<DetectedFace> faces = null;

        // StreamContent disposes the stream it sends, so give the client a copy
        using (var analyzeCopyBuffer = new MemoryStream())
        {
            file.InputStream.CopyTo(analyzeCopyBuffer);
            file.InputStream.Seek(0, SeekOrigin.Begin);
            analyzeCopyBuffer.Seek(0, SeekOrigin.Begin);

            faces = await CognitiveServicesClient.DetectFaces(analyzeCopyBuffer);
        }

        // Guard against a null result from a failed API call
        faces = faces ?? new List<DetectedFace>();

        using (var img = new Bitmap(file.InputStream))
        // make copy, drawing on indexed pixel format image is not supported
        using (var nonIndexedImg = new Bitmap(img.Width, img.Height))
        using (var g = Graphics.FromImage(nonIndexedImg))
        using (var pen = new Pen(Color.Red, 5))
        using (var mem = new MemoryStream())
        {
            g.DrawImage(img, 0, 0, img.Width, img.Height);

            foreach (var face in faces)
            {
                var rectangle = face.FaceRectangle.ToRectangle();
                g.DrawRectangle(pen, rectangle);
            }

            nonIndexedImg.Save(mem, ImageFormat.Png);

            var base64 = Convert.ToBase64String(mem.ToArray());
            imageResult = String.Format("data:image/png;base64,{0}", base64);
        }

        return View((object)imageResult);
    }

I had to add some nasty hacks here. For some reason the StreamContent class used in the cognitive services client automatically disposes its input stream, so we hand the client a copy of the uploaded stream and keep the original for drawing. Before drawing we also copy the image to a new bitmap, because System.Drawing does not support drawing on photos that have an indexed pixel format. In the end we convert the image to an inline string we can use with the img tag.
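The two stream-related steps can be isolated into small helpers. This is only a sketch: the ImageHelpers class and its method names are hypothetical and are not part of the sample application. It shows one way to copy a stream so the original stays readable, and how the inline image string is put together.

```csharp
using System;
using System.IO;

// Hypothetical helper class; not part of the sample application.
public static class ImageHelpers
{
    // Copies a stream into memory and rewinds both streams,
    // so the original can still be read after the copy is consumed.
    public static MemoryStream CopyToMemory(Stream source)
    {
        var copy = new MemoryStream();
        source.CopyTo(copy);
        copy.Seek(0, SeekOrigin.Begin);

        if (source.CanSeek)
        {
            source.Seek(0, SeekOrigin.Begin);
        }

        return copy;
    }

    // Builds a data URI that can be used as the src of an img tag.
    public static string ToDataUri(byte[] imageBytes, string mimeType)
    {
        return "data:" + mimeType + ";base64," + Convert.ToBase64String(imageBytes);
    }
}
```

With helpers like these the controller could pass ImageHelpers.CopyToMemory(file.InputStream) to the client and build the result with ImageHelpers.ToDataUri(mem.ToArray(), "image/png").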

We also need a view with the upload form and the image.

    @model string

    @{
        ViewBag.Title = "DetectFace";
    }

    <h2>DetectFace</h2>

    <form method="post" enctype="multipart/form-data">
        Upload photo to analyze: <br />
        <input type="file" name="ImageToAnalyze" /><button type="submit">Send</button>
    </form>

    @if(Model != null)
    {
        <img src="@Model" />
    }

Now we are done with the web application and it’s time to try it out.

Detecting faces

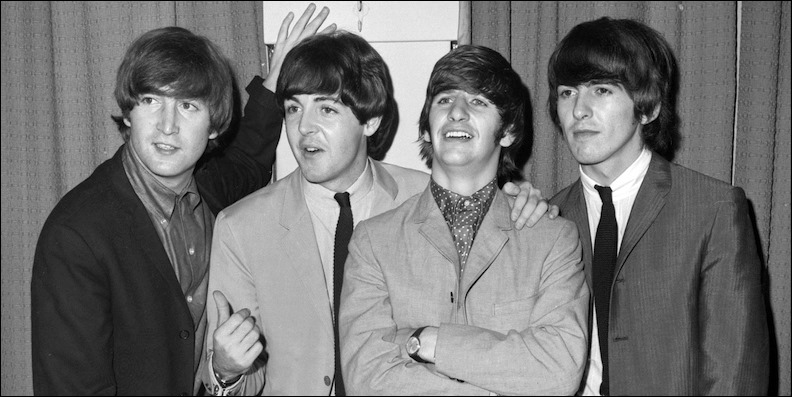

To try the web application out I took a photo of The Beatles from Google search; the source of the photo is The Beatles page at Pitchfork.

Let’s run the web application and go to the DetectFaces page. Then let’s upload The Beatles photo, click Send and see what happens.

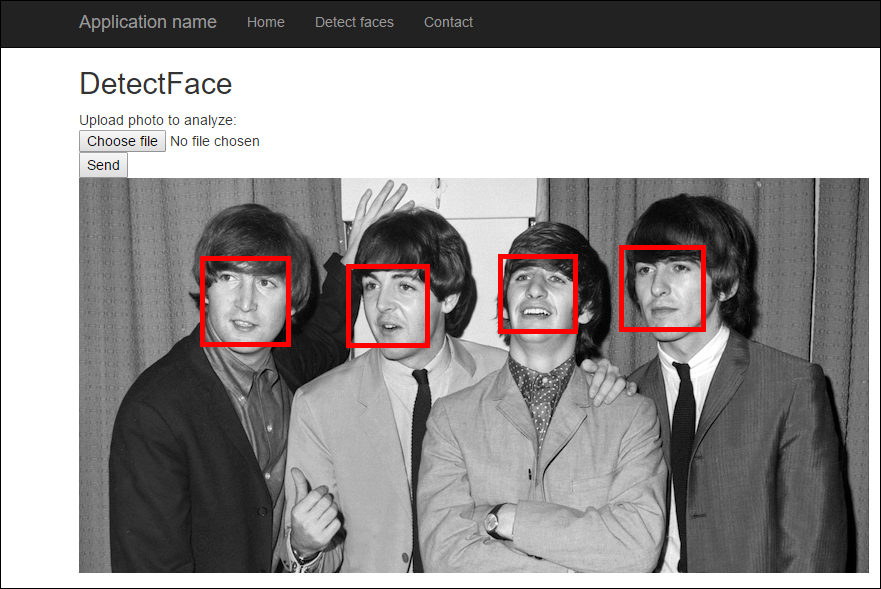

My result is here:

If the keys are working, the API end-point URL is correct and we have not exceeded our daily limit, we get back the photo with rectangles around all detected faces.
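When one of these conditions is not met, the HTTP status code of the response usually hints at the cause. The sketch below is a hypothetical helper, not part of the sample application. The mapping reflects my understanding of the Face API error codes (401 for an invalid key, 403 for an exhausted quota, 429 for rate limiting) and should be checked against the official documentation; the messages are made up for illustration.

```csharp
using System.Net;

// Hypothetical helper; the messages are illustrative, not taken from the API.
public static class FaceApiErrors
{
    public static string Describe(HttpStatusCode status)
    {
        // Cast to int because older frameworks have no enum member for 429
        switch ((int)status)
        {
            case 200: return "OK";
            case 401: return "The service key is missing or invalid for this end-point.";
            case 403: return "The call volume quota is used up.";
            case 404: return "Resource not found; check the API end-point URL.";
            case 429: return "Too many requests; the rate limit was exceeded.";
            default: return "Unexpected response: " + (int)status;
        }
    }
}
```

The client could call FaceApiErrors.Describe(httpResponse.StatusCode) and log the result instead of silently returning nothing.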

Wrapping up

Face detection and other image analysis solutions sound like something big, resource-intensive and complex at first. Using Microsoft Cognitive Services, which run in the Azure cloud, takes all this complexity away from us. We have to write some client code and visualize the results. That’s it. The heavy work is done on Azure servers; we just consume these services over a simple web API. Our web application that seems sort of intelligent is actually a simple web-based UI that works as a mediator between the user and cognitive services.

References

- Microsoft Cognitive Services (Official homepage)

- Face API (Official documentation)

- Cognitive services demo application (Gunnar Peipman)


Hi Gunnar Peipman

Hope you are doing good.

I’d like to know about Face API image storage in the cloud. My concern is how to identify the uploaded images in Azure. Are they located at a specific path somewhere?

In AWS we can see uploaded images easily.

Kindly share your valuable thoughts

Hi Sachin,

Cognitive services actually don’t seem to hold copies of images. AFAIK they just detect faces with all important points and save this meta data. Keeping up the link between image file and data in cognitive services is up to developers.

Hi,

I downloaded your code from GitHub! It works wonders. But when I copy your code and start from scratch I get reference/assembly runtime errors, which I failed to solve.

Do you have a project on how to create a person group and person for face API in MVC web application?

Sample application supports managing person groups and people too. Just select Faces from top menu and Person groups from left menu.

Hi! I am wondering if I did not do the train function will it still be able to identify the individual?

Is it possible to create a person and add it to my local DB too? Please advise me. I know it is not necessary but I wish to store it in my own DB just for reference purpose.

Markson, it is possible it identifies but identification is probably not so accurate.

Alison, you should use the ID for person you are using with cognitive services. There is also additional data field available where you can keep the ID of person you are using in your database. You can make it work both ways but I would prefer using ID by cognitive services.

Hi Gunnar,

Thank you for your reply! I wish to use the userdata field as that in my local db schema i set it as a primary key as i want the userdata to be unique like a ID. so now i have created a DB but i do not know how to add the data into my DB once the “person” is successful created using the cognitive service

Hi Alison,

I think I will create a small sample application to show how to keep the link between a local database and cognitive services face detection. It won’t happen over the next hours but over the next few days, I hope.

What a great job you did with this Gunnar.

Thank you for all the hard work. A very useful utility to add new people and to add photos to existing people. What a great tool!

Only problem I had was finding the “web application root folder” to add the keys file. However, I was able to add the two “add key” lines to the “Web config” file and that worked fine.

Thanks again!

Frank

One other issue I noted thus far is the “Delete Group” feature does not function.

May I know where to get the small sample application? Thank you

I will write here as soon as it’s done. Meanwhile you can check out my demo application that demonstrates how to use cognitive services: https://github.com/gpeipman/CognitiveServicesDemo

Hello Gunnar,

I would like to show this real-time video face identification, I trained the image and recognized it well, but how to demonstrate with the Microsoft example https://github.com/Microsoft/Cognitive-Samples-VideoFrameAnalysis

sorry for English

Hi Marco,

I don’t have answer right now. I will take a look at this example later.