IFileClient – Generalizing storage access in ASP.NET web applications

This is one of the examples from my presentation about porting existing ASP.NET applications to Windows Azure and building hybrid applications that work in multiple environments. One important aspect is file storage, which may need different implementations for different technical environments. In this post I introduce my idea for generalized file access in web applications.

Difference between classic hosting and cloud

Web applications hosted in regular environments, such as shared or dedicated hosting or an on-premises server, usually handle files directly through disk access classes.

On Windows Azure compute roles we can also save files to the local disk, but these files are deleted together with the role instance. Windows Azure deletes and creates role instances automatically and transparently, so on Windows Azure we should keep files in external storage such as Windows Azure blob storage or a competing solution.

Hybrid mode

You may also find yourself in a situation where you host your application on-premises but keep files on blob storage for some reason. For example, you may need this kind of solution when you offer direct file downloads to a large number of users. You don’t want to buy more servers or disk arrays and manage them. Instead you choose Windows Azure blob storage because it scales well.

Now, suddenly, you want your application to support a totally different storage than before, ideally without changing the application itself. Migrating files can be automated, but you cannot automate code modifications if file access classes are used everywhere in your code.

Move file access to interface

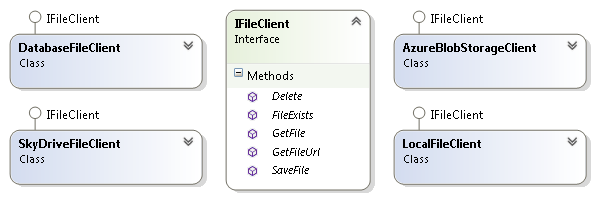

To make our application not aware of storage details we have to move storage logic to somewhere else and hide it behind some interface that all storage classes can implement. Image below shows what we are up to.

IFileClient is the interface that defines the file system operations our application needs. The GetFileUrl() method may seem odd for local file access, but it is really useful when we provide direct downloads from server disk or Windows Azure blob storage.

```csharp
public interface IFileClient
{
    void SaveFile(string storeName, string fileName, byte[] file);
    byte[] GetFile(string storeName, string fileName);
    void Delete(string storeName, string fileName);
    bool FileExists(string storeName, string fileName);
    string GetFileUrl(string storeName, string fileName);
}
```

What is store name?

This is a restriction I was forced to make because Windows Azure blob storage has no support for subfolders. We have a storage account, and under the storage account we have containers that contain files. This gives us a directory structure with one level of folders.

It is possible to mimic a folder hierarchy on blob storage through special naming of containers, and in practice it works well (tried and proven). Still, I’m not very happy with this solution: something about it annoys me, although I’m not sure yet what it is.

On the local machine we have a folder that is considered the content root. Under the content root we have folders that represent containers, and these folders contain files.
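The naming trick mentioned above can be sketched as a small helper that flattens a folder-like path into one store name. This is only an illustration under assumptions: the `StoreNames` class, the `FromPath` method and the hyphen separator are hypothetical and not part of the original code. The sketch lowercases the segments because blob container names are restricted to lowercase letters, digits and hyphens; it does not validate the other naming rules, such as length limits.

```csharp
using System;
using System.Linq;

// Hypothetical helper (not from the original post): turns a folder-like
// path into a single-level store name, e.g. "Contracts/2012" -> "contracts-2012".
public static class StoreNames
{
    public static string FromPath(string path)
    {
        if (string.IsNullOrWhiteSpace(path))
            throw new ArgumentException("Path must not be empty.", "path");

        // Split on both separator styles, drop empty segments, normalize case.
        var segments = path
            .Split(new[] { '/', '\\' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(s => s.Trim().ToLowerInvariant());

        // Join the segments with a hyphen to get one flat name.
        return string.Join("-", segments);
    }
}
```

A call such as `fileClient.SaveFile(StoreNames.FromPath("Contracts/2012"), "15.pdf", bytes)` would then work the same way against local folders and blob containers. One weakness of this scheme is that folder names which already contain hyphens become ambiguous.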

Example: LocalFileClient

As an example of IFileClient, here is a simple file client implementation intended for use with local drives.

```csharp
using System;
using System.Configuration;
using System.IO;

public class LocalFileClient : IFileClient
{
    // Content root folder; every store is a subfolder of it.
    private readonly string _fileRoot;

    public LocalFileClient()
    {
        _fileRoot = ConfigurationManager.AppSettings["ContentRoot"];
    }

    public void SaveFile(string storeName, string fileName, byte[] file)
    {
        string path = Path.Combine(_fileRoot, storeName, fileName);

        if (File.Exists(path))
            File.Delete(path);

        // Create the store folder (not a folder named after the file).
        Directory.CreateDirectory(Path.GetDirectoryName(path));
        File.WriteAllBytes(path, file);
    }

    public byte[] GetFile(string storeName, string fileName)
    {
        if (FileExists(storeName, fileName))
        {
            var path = Path.Combine(_fileRoot, storeName, fileName);
            return File.ReadAllBytes(path);
        }

        // Callers get null when the file does not exist.
        return null;
    }

    public void Delete(string storeName, string fileName)
    {
        var path = Path.Combine(_fileRoot, storeName, fileName);
        File.Delete(path);
    }

    public bool FileExists(string storeName, string fileName)
    {
        var path = Path.Combine(_fileRoot, storeName, fileName);
        return File.Exists(path);
    }

    public string GetFileUrl(string storeName, string fileName)
    {
        // Local files have no public URL in this implementation.
        throw new NotImplementedException();
    }
}
```

This file client implementation doesn’t offer public URLs. It is the responsibility of the web application to generate file download URLs and to send out the file when one of these URLs is requested.
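If the application does want to hand out download links for local files, one option is to build application-relative URLs and serve them through a controller action. The sketch below is hypothetical: the `LocalFileUrls` class and the `/files/{store}/{file}` route are assumptions for illustration, and the application would still have to map that route to code that reads the file through IFileClient.

```csharp
using System;

// Hypothetical helper (not from the original post): builds an
// application-relative download URL for a locally stored file.
public static class LocalFileUrls
{
    public static string Build(string storeName, string fileName)
    {
        // Escape both parts so spaces and special characters stay valid in a URL.
        return "/files/" + Uri.EscapeDataString(storeName)
             + "/" + Uri.EscapeDataString(fileName);
    }
}
```

A local GetFileUrl() implementation could return `LocalFileUrls.Build(storeName, fileName)` instead of throwing, which would keep calling code identical for local and blob storage.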

Introducing IFileClient to existing application

Applications differ, and architectural considerations depend heavily on the application and its business requirements, so I give here only some simple pointers on how to start using this interface.

- Define IFileClient in a library that has no references to application-specific components. Well-layered applications often have an infrastructure library that defines interfaces and perhaps provides default implementations of them. Such a library is a good place for IFileClient.

- Use a dependency injection framework (StructureMap, Ninject) to provide the correct implementations of interfaces to your web pages or forms.

- Make all file access code use the IFileClient implementation you prefer.

- Test your application after these changes to make sure you introduced no new bugs.

Of course, for many legacy applications this change means a lot of additional work.
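One benefit of routing all file access through the interface is that tests can run without any disk or cloud storage. Below is a minimal sketch of an in-memory implementation, assuming the IFileClient interface shown earlier (repeated here only so the example compiles on its own). The `InMemoryFileClient` class is hypothetical and not part of the original code.

```csharp
using System;
using System.Collections.Generic;

// Same interface as in the post, repeated so the sketch is self-contained.
public interface IFileClient
{
    void SaveFile(string storeName, string fileName, byte[] file);
    byte[] GetFile(string storeName, string fileName);
    void Delete(string storeName, string fileName);
    bool FileExists(string storeName, string fileName);
    string GetFileUrl(string storeName, string fileName);
}

// Hypothetical test double: keeps files in a dictionary instead of storage.
public class InMemoryFileClient : IFileClient
{
    private readonly Dictionary<string, byte[]> _files =
        new Dictionary<string, byte[]>(StringComparer.OrdinalIgnoreCase);

    // Combines store and file name into one dictionary key.
    private static string Key(string storeName, string fileName)
    {
        return storeName + "/" + fileName;
    }

    public void SaveFile(string storeName, string fileName, byte[] file)
    {
        _files[Key(storeName, fileName)] = file; // overwrites an existing entry
    }

    public byte[] GetFile(string storeName, string fileName)
    {
        byte[] file;
        // Returns null for a missing file, like LocalFileClient does.
        return _files.TryGetValue(Key(storeName, fileName), out file) ? file : null;
    }

    public void Delete(string storeName, string fileName)
    {
        _files.Remove(Key(storeName, fileName));
    }

    public bool FileExists(string storeName, string fileName)
    {
        return _files.ContainsKey(Key(storeName, fileName));
    }

    public string GetFileUrl(string storeName, string fileName)
    {
        throw new NotSupportedException("In-memory files have no URL.");
    }
}
```

In a unit test, a class that depends on IFileClient can receive `new InMemoryFileClient()` through its constructor, while the dependency injection container supplies the real implementation in production.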

Using IFileClient in ASP.NET MVC controller

Since people are always interested in how to use a piece of code, here is an example of a simple ASP.NET MVC controller. This controller lets users manage contracts and download them as PDF files. The Download method checks whether the contract is available as a file and, if it is, starts the download.

```csharp
using System.Net.Mime;
using System.Web.Mvc;

public class ContractController : Controller
{
    private readonly IFileClient _fileClient;

    // The IFileClient implementation is injected through the constructor.
    public ContractController(IFileClient fileClient)
    {
        _fileClient = fileClient;
    }

    // ...

    public ActionResult Download(int id)
    {
        var fileName = id + ".pdf";
        var file = _fileClient.GetFile("contracts", fileName);

        // GetFile returns null when the file does not exist.
        if (file == null)
            return HttpNotFound();

        var fileResult = new FileContentResult(file, MediaTypeNames.Application.Pdf);
        fileResult.FileDownloadName = fileName;
        return fileResult;
    }

    // ...
}
```

Wrapping up

To support different file storage systems you are often bound by the limitations of the most restricted one. As we saw, it is easy to write a file client interface and inject it into your applications, and implementations of the file client are pretty simple too. Although you have to change your application code, it becomes much easier to move to another storage system later if needed.

Can we see what one of the implementations might look like, say for Ninject and Azure Storage?

Since it’s an I/O based API, it might be better represented with an asynchronous (Task) API. This would integrate well with Web API in particular, but also with Web Forms and MVC, and it is potentially more scalable in IIS.

Also, in a real world app it would be best to support Streams instead of byte[], so the implementation doesn’t have to load the entire BLOB to memory.

Implementation remarks aside, thanks for a very useful post on this concept. Using strategies like this is going to be more and more important, and as you point out, there are unit test advantages too.

Thanks for the comment, JT. Using streams or byte arrays (or maybe both) is a technical decision that depends heavily on what the application actually needs. An application that works with small files can use byte arrays. Big BLOBs can also be served through public containers with direct download links.

I agree that a stream-based and async interface would be more generic, and that way it is also easy to write implementations for Windows Phone and Windows RT.

In the MVC controller example, how is the constructor called with the right IFileClient by the MVC runtime?

I’m using a StructureMap based controller factory. It creates the controller requested by MVC and then injects all constructor arguments automatically.

Nice article. Thank you
