Experiment: List internals and performance when adding new elements

Lists and their performance has been hot discussion topic for years. I have seen many examples and read a lot of stories about List class and I made conclusion that there is too much pointless opinions about it. In this posting I will show you how List<T> performs when adding elements to it and how it works internally. Be prepared for interesting discussion!

As a first thing I will introduce you how I measured List<T> performance and what results I got. After that we will see how List<T> is internally optimized. There are some changes that happen during optimizations and sometimes people consider these changes as scary ones. I will calm you all down, there is nothing so horrible as some authors say. In the end of the posting I will make some quick conclusions about my experiment and investigations.

My experiment

I wrote for loop that adds 10 million integers to List<int>. I made eight experiments with different initial List<T> capacity to see how initial capacity affects performance. In all of my tests I made sure I don’t make mistakes I described in my previous posting Common mistakes made when measuring the speed of code. Here is the example of my code I used to measure List<T> performance.

static void Main()

{

var watch = new Stopwatch();

var cycles = Math.Pow(10, 2);

var elements = Math.Pow(10, 7);

var listCapacity = 10000000; // (int)elements;

var times = 0D;

for (var cycle = 0; cycle < cycles; cycle++)

{

var inputList = new List<int>(listCapacity);

watch.Reset();

watch.Start();

for (var i = 0; i < elements; i++)

{

inputList.Add(i);

}

watch.Stop();

times += watch.ElapsedMilliseconds;

}

Console.WriteLine("Time: " + times / cycles);

Console.ReadLine();

}

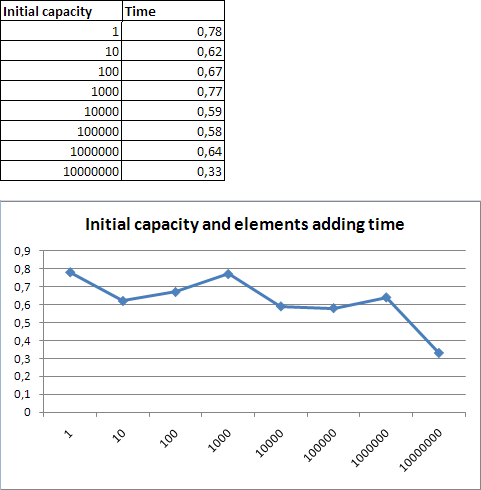

For initial capacity 1 I got 0.78 seconds as average to add 10 million elements to List<T> and for initial capacity 10 million I got 0.33 seconds as average time. So there is two times difference although in time the difference is so small that we don’t recognize it. Here are the results.

As you can see it does not really matter how we choose the initial capacity of List<T>. There seems to the question if these is small initial capacity or there is enough capacity to fill list with elements without any need to resize internal buffers. By default, initial capacity is set to four elements.

How List<T> is optimized?

Source code of .NET Framework is available for everyone and my Visual Studio 2010 is configured to download .NET Framework symbols and source code when I debug my code. The Add() method of List<T> is as follows.

public void Add(T item)

{

if (_size == _items.Length) EnsureCapacity(_size + 1);

_items[_size++] = item;

_version++;

}

Before adding element to internal buffer there is check for capacity. If elements count is reaching the capacity of list then capacity will be increased automatically. Finding new capacity happens in internal method called EnsureCapacity().

private void EnsureCapacity(int min)

{

if (_items.Length < min)

{

int newCapacity;

if (_items.Length == 0)

newCapacity = _defaultCapacity;

else

{

newCapacity = _items.Length * 2;

}

if (newCapacity < min) newCapacity = min;

Capacity = newCapacity;

}

}

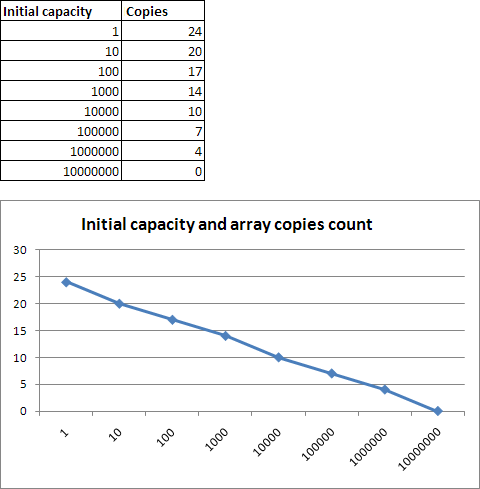

As we can see the new capacity for list is two times bigger than previous capacity. But as we saw from diagram above we don’t win much if we don’t set the capacity to final length of list at first place.

What happens when list capacity changes?

When capacity is increased then something happens in setter of Capacity. In my opinion these operations should be part of EnsureCapacity() and not part of some setter. Here is how internal buffer is resized to match the new capacity.

public int Capacity

{

get

{

return _items.Length;

}

set

{

if (value != _items.Length)

{

if (value > 0)

{

T[] newItems = new T[value];

if (_size > 0)

{

Array.Copy(_items, 0, newItems, 0, _size);

}

_items = newItems;

}

else

{

_items = _emptyArray;

}

}

}

}

We can see that this is the place when blasphemy called array copying happens. Now we know how list capacity is optimized and how internal buffer is increased. Let’s see now how the number of array copies changes with elements count in list.

Comparing this diagram with previous one we have new question – how array copying affects overall performance so lightly? We can practically see correlation only in last part of diagrams. What is going on?

How is implemented resizing of internal array?

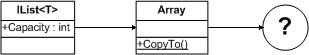

Okay, let’s open Reflector and hunt Array.Copy() as far as we can. Pretty soon our journey ends with call declared like this.

[MethodImpl(MethodImplOptions.InternalCall)]

[ReliabilityContract(Consistency.MayCorruptInstance, Cer.MayFail)]

internal static extern void Copy(Array sourceArray, int sourceIndex,

Array destinationArray,

int destinationIndex,

int length, bool reliable);

This is the final point we can reach. On picture we can show it this way.

The big question is what is happening in this external method. I discovered the answer when I measured performance of different array copying methods. All safe managed code methods gave me pretty similar results. Extremely heavy performance hit came when I moved to unsafe code and pointers.

When I compare performance of Array.Copy() method with my own array copy method then their performance is almost equal. As there is no other way I know that should end up with externals and give extremely fast results I am very sure that internally .NET Framework copies arrays using some technique that I tried out with pointers.

Conclusions

Based on previous discussion I have three conclusions.

- Initial capacity of list affects it’s performance. When we avoided internal buffer resizings we got almost twice better results than with buffer corrections. Although we got performance better it is not so much better that we should work hard to find out final capacities of our lists at any price.

- List<T> is optimized very well. When list capacity is reached then capacity of list is doubled and that leaves room enough to add new elements without internal buffer resizing. For 10 million elements with initial capacity 1 we got only 24 array copy operations.

- Array copy is not a real problem. Array copy in .NET Framework is implemented (expectedly) in unsafe code using pointers. You can try out how fast Array.Copy() works when compared to custom code that copies elements from one array to another. Array copy is extremely fast and it is not bottleneck in List<T> work.

Great post! What about the .NET Framework link? Is not working?

Array.Copy would be just a simple memcpy (or some variation) in C++ for the valid part of the array (the one with values). I believe there is even a special CPU instruction that can be used instead of byte-by-byte copy to speed things up – e.g. by copying 4 bytes at once or something like that.

Don’t forget the final capacity of the list for the different initial capacities:

1: 16777216 (= 25.85 MB wasted)

10: 10485760 (= 1.85 MB wasted)

100: 13107200 (= 11.85 MB wasted)

1000: 16384000 (= 24.35 MB wasted)

10000: 10240000 (= 0.92 MB wasted)

100000: 12800000 (= 10.68 MB wasted)

1000000: 16000000 (= 22.89 MB wasted)

10000000: 10000000 (= 0 bytes wasted)

(NB: Wasted space assumes Int32 = 4 bytes and standard definition of MB = 1048576 bytes.)

Thanks for feedback, RichardD! I really appreciate your feedback. As I mentioned, it is good to know the size of list but it is not always possible. In this case we must clear and destroy list as soon as possible.