SQL Server database backup to Azure Blob Storage

SQL Server supports exporting data-tier applications (BACPAC). It means that database is packaged to one file with schema and data. It’s not same as SQL Server backups but to backup smaller databases it works pretty well. Those who don’t want to mess with local backup storage can use cloud services like Azure Blob Storage to keep database backups. Here’s the example how I automated backup of one not so big database using free tools SqlPackage and AzCopy.

Tools

To make data-tier application or BACPAC style backup of database and upload it to Azure Blob Storage we need two utilities:

- SqlPackage – create BACPAC file of given database.

- AzCopy – command-line utility to manage Azure Blob Storage

To keep things simple and minimalistic I downloaded both utilities as zip-files so I can keep all stuff in one folder. To update utilities I don’t have to uninstall them first – I just download newer zip-files and write files over.

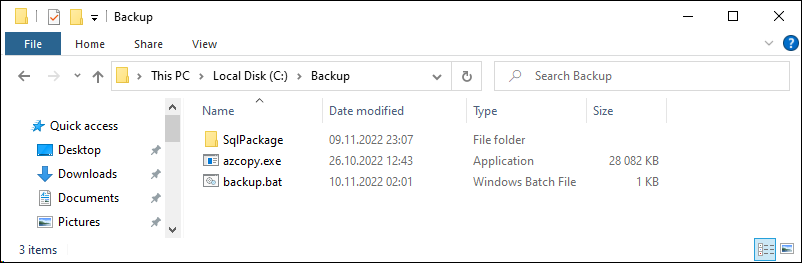

Here’s how I organized files on disk.

As SqlPackage comes with number of files I put it in separate directory so I don’t have any mess in my backup scripts directory.

Backup script

We will use simple DOS batch file to get work done. This is what our backup script will do:

- Define variables for:

- Azure Blob Storage container URL

- Storage container Shared Access Signature (SAS) token. If you don’t know how to generate SAS token then here’s the official guide by Microsoft: Manage blob containers using the Azure portal (a little bit before middle part of page).

- Date based name for backup.

- Database connection string.

- Bump database to local BACPAC file.

- Upload file to Azure Blob Storage container.

- Delete local BACPAC file.

Here’s the script with explaining comments. The only tricky part is getting the date right.

REM Define variables for connection string, storage URL and SAS token

set "CONNSTR=Server=tcp:localhost,1433;Database=db;User ID=X;Password=X;Trusted_Connection=False;Encrypt=False"

set STORAGE=https://my.blob.core.windows.net/container

set "SAS=YOUR_SAS_TOKEN_HERE"

REM Create date based file name

REM ~6,4 means from sixth character take next four

REM Correct date parts depend on date format in your system

set CUR_YYYY=%date:~6,4%

set CUR_MM=%date:~3,2%

set CUR_DD=%date:~0,2%

set FILENAME=%CUR_YYYY%%CUR_MM%%CUR_DD%.bacpac

REM Bump database to BACPAC file

SqlPackage\sqlpackage.exe /TargetFile:%FILENAME% /Action:Export /SourceConnectionString:%CONNSTR%

REM Copy BACPAC to Azure Blob Storage

Azcopy copy %FILENAME% "%STORAGE%?%SAS%"

REM Delete local BACPAC file

del %FILENAME% /Q

You can take this script with copy and paste, replace values and make it run before heading to next step.

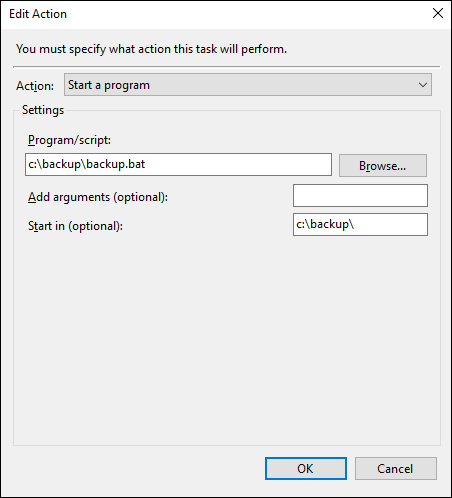

Backup task on Task Scheduler

We can leave this our script on disk and run it manually but usually we want to run it automatically. One of the most classic options is Windows Task Scheduler. If you know what is SQL Server and what is Windows Task Scheduler you probably don’t need tutorial for scheduled tasks.

There’s one important thing. Make sure you set directory for script. It’s Start in field on the following dialog.

Now it’s time to run the script as scheduled task and make sure it runs to the glory end without any errors.

Wrapping up

We automed MSSQL backups using SqlPackage and AzCopy. Our backups are running on Windows Task Scheduler as nightly batch. I know that using DOS batch is kind of retro approach and we also have Powershell but this time I decided to go with minimalistic set of tools. In the end we have working solution for cases when exporting data-tier application and uploading it to cloud is enough for us.

Comments are closed.