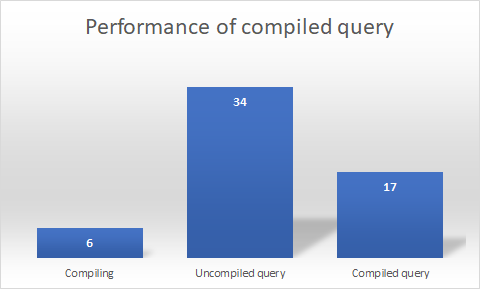

Performance of compiled queries in Entity Framework Core 2.0

Before applying any optimizations to our code we have to ask one question: what is the cost of improvement and is it really improvement? Compiled queries in Entity Framework 2.0 are categorized as high-availability feature but before making any decisions we need to know what is the actual win. This blog post introduces the measurements I made with simple database context to compare compiled and uncompiled queries in Entity Framework Core 2.0.

What was exactly measured?

To avoid confusion I give some explanations before going to measurements. Compiled queries in Entity Framework Core are LINQ queries that are compiled in application or library to be sent to database server. From database server view point it is the client-side feature and it is not related to precompiling views and SQL commands in database server. This is why I used in-memory data provider as measuring with real database will pollute results with all kind of database server specifics we cannot easily remove from results.

Test database context

I’m using simple database context with in-memory provider to have minimum overhead. This way I also avoid using real database that makes it harder to understand what part of performance raise came from code and what came by database optimizing execution plans and caching data. Database context knows only one entity type called Category.

public class Category

{

public Guid Id { get; set; }

public string Name { get; set; }

public Category Parent { get; set; }

}

Additionally it has compiled query to get first top-level category and methods to get top-level category with and without compiled query. Here is my database context.

public class TestDbContext : DbContext

{

private static Func<TestDbContext, Category> _getCategoryCompiled =

EF.CompileQuery((TestDbContext ctx) =>

ctx.Categories.Include(c => c.Parent)

.Where(c => c.Parent == null)

.OrderBy(c => c.Name)

.FirstOrDefault());

public TestDbContext(DbContextOptions<TestDbContext> options) : base(options)

{

}

public DbSet<Category> Categories { get; set; }

public void FillCategories()

{

var foodCategory = new Category { Id = Guid.NewGuid(), Name = "Food", Parent = null };

Categories.AddRange(

foodCategory,

new Category { Id = Guid.NewGuid(), Name = "Drinks", Parent = null },

new Category { Id = Guid.NewGuid(), Name = "Clothing", Parent = null },

new Category { Id = Guid.NewGuid(), Name = "Electronis", Parent = null }

);

for(var i = 0; i < 50; i++)

{

Categories.Add(new Category { Id = Guid.NewGuid(), Name = "Random", Parent = foodCategory });

}

SaveChanges(true);

}

public Category GetTopLevelCategory()

{

return Categories.Include(c => c.Parent)

.Where(c => c.Parent == null)

.OrderBy(c => c.Name)

.FirstOrDefault();

}

public Category GetTopLevelCategoryCompiled()

{

return _getCategoryCompiled(this);

}

}

With this dummy database context I made simple measurements.

Measurement methodics

I am sorry I did no scientific level measuring but went the easy way. I decided to run enough cycles to bring out differences we can sense and understand. I saw some results in Christos Matskas blog post Improving EF Core performance with Compiled Queries but there compiled queries made not much difference as loops were actually pretty short. I decided to go way longer loops – 100K cycles to see the difference. And I can tell – there is big difference.

I made three different measurements:

- Compiling query

- Querying for top level category

- Querying for top level category with compiled query

I ran all these measurements in loops with length of 100K cycles and I ran measurements multiple times. All times I got almost the same results.

Using Stopwatch class I measured how much time it takes for each of these three measurements to run 100K times.

Compiling the query

First I ran query compiling to see what is the cost of this action. Using small number of cycles we don’t see any difference. But 100K cycles was different.

private static void MeasureCompiling()

{

var watch = new Stopwatch();

var cycles = 100000;

watch.Start();

for (var i = 0; i < cycles; i++)

{

EF.CompileQuery((TestDbContext ctx) =>

ctx.Categories.Include(c => c.Parent)

.Where(c => c.Parent == null)

.OrderBy(c => c.Name)

.FirstOrDefault());

}

watch.Stop();

Console.Write("Compiling: ");

Console.WriteLine(watch.Elapsed);

}

The result on my machine is 6 sec in average.

Measuring uncompiled query

I did next 100K rounds with GetTopLevelCategory() method of test database context. This method queries the data source and every time the query is compiled again.

private static void MeasureUncompiledQuery()

{

var options = new DbContextOptionsBuilder<TestDbContext>()

.UseInMemoryDatabase(Guid.NewGuid().ToString())

.Options;

var context = new TestDbContext(options);

var watch = new Stopwatch();

var cycles = 100000;

context.FillCategories();

watch.Start();

for (var i = 0; i < cycles; i++)

{

context.GetTopLevelCategory();

}

watch.Stop();

Console.Write("Uncompiled query: ");

Console.WriteLine(watch.Elapsed);

}

100K queries on my machine too ~34 sec in average.

Measuring compiled query

It’s time to see if compiled query makes any difference or not. For this I ran GetTopLevelCategoryCompiled() method of test database context 100K times.

private static void MeasureCompiledQuery()

{

var options = new DbContextOptionsBuilder<TestDbContext>()

.UseInMemoryDatabase(Guid.NewGuid().ToString())

.Options;

var context = new TestDbContext(options);

var watch = new Stopwatch();

var cycles = 100000;

context.FillCategories();

watch.Start();

for (var i = 0; i < cycles; i++)

{

var top = context.GetTopLevelCategoryPrecompiled();

}

watch.Stop();

Console.Write("Compiled query: ");

Console.WriteLine(watch.Elapsed);

}

100K rounds with compiled query on my machine gave way better result – ~17 sec.

I was able to get the same results on all runs of measurements. There were only small fluctuations (±800ms) but not something big like 5 seconds or something like this.

Simple calculation shows that we can achieve up to 50% in performance with compiled queries.

NB! Don’t take these numbers as absolute truth. The actual performance gain may be totally different .- be it higher or lower – depending on concrete application and database. The numbers given here originate from one simple set of tests and these doesn’t give any insights about overall effect on system when using compiled queries.

Wrapping up

When just running few methods in test application we don’t notice any difference between compiled and uncompiled queries. But running those queries in loop 100K times was enough on my machine to bring out better the differences in running times. After these experiments we probably understand why compiled queries are part of high-availability section in Entity Framework Core documentation. Although compiled queries are powerful way to avoid repeating work in application when it runs we cannot yet make all queries use it – currently all queries returning more than one result (IEnumerable<T>, IQueryable<T>) are not supported. Hopefully these queries will be supported in future versions of Entity Framework Core.

Compiled query with in memory database is not a relevant benchmark. In memory queries have a different execution pipeline compared to ones hitting the database.

You should really test by hitting a database.

I’m sorry to say it but you didn’t got the idea quite right. The idea was to measure the effect of compiled queries alone. Using real database will pollute the measurements as it introduces many database specific details and this is something we want to avoid when measuring one specific feature of database client.

Gunar,

When using InMemory database, materialization pipeline is not used, SQL generation is not used either. Which means this benchark does not test what your are actually interested in, you’re only testing a thin layer or EF, the surface, not the whole cake. If you are interested in how much better it performs when accesing the DB, then the gains are not trasferable. Also, first request and subsequent request benchmarking is very important, EF does cahing internally in both compiled and uncompiled queries.

Thanks for detailed reply, Cata. I really appreciate it. I think I found a way how to run compiling for MSSQL the way that database doesn’t get involved. I make some experiments and if I succeed I will post the results as update to this post.