Optimized hierarchy traverser

My first draft of hierarchy traversing component got some serious feedback and it’s time to make some changes before moving on to next challenges. Hierarchy traverser is not optimal yet as it uses tail-call recursion and it’s easy to run to stack overflow with it. This blog post solves this problem and prepares for next challenges like node cache and continue-from-given-node.

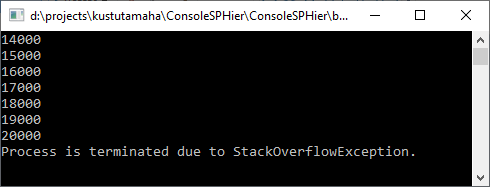

Problem: running to stack overflow

As reader Ants pointed out then .NET runtime doesn’t always optimize tail-call recursion and using this simple piece of code it’s possible to run to stack overflow fast.

Let’s define hierarchy provider of integer.

class IntHeirarchyProvider : IHierarchyProvider<int>

{

public int MaxDepth { get; private set; }

public IntHeirarchyProvider(int maxDepth) => MaxDepth = maxDepth;

public bool HasRootNode => true;

public bool IsHierarchyNode(int node) => true;

public IList<int> LoadChildren(int node) => node < MaxDepth ? new List<int>() { node + 1 } : null;

public int LoadRootNode() => 1;

}

Now let’s run it using following code going to depth of 100K.

static void Main(string[] args)

{

var traverser = new HierarchyTraverser<int>(new IntHeirarchyProvider(100000));

traverser.NodeLoaded += (s, e) => { if (e.Node % 1000 == 0) Console.WriteLine(e.Node); };

traverser.Start();

}

Here’s the resulting StackOverflowExcpetion.

Although in practice we don’t meet document repositories or site menus with such a depth I want my solution to be ready also for other types of applications where such needs may raise.

Introducing wrapper

I solved problem by moving from recursion to stack. It solves the problem with stack overflow but the price is class that wraps hierarchy nodes. When using stack we cannot use anymore one simple list to keep hierarchy path of current node. We have to keep this information somewhere else and the most logical choice for me was wrapper class.

public class NodeWrapper<T>

{

public NodeWrapper<T> Parent { get; set; }

public T Node { get; set; }

public int Level { get; set; }

}

Wrapper has some additional properties we need usually when migrating document repositories:

- Parent – when migrating document repository then very often in legacy systems we have to know at least one or two parents of current node. One example is forming valid number for document folder.

- Level – or current depth is sometimes used to recognize the type of document folder in repository.

This little change actually affects other parts of code a lot as we see with next steps.

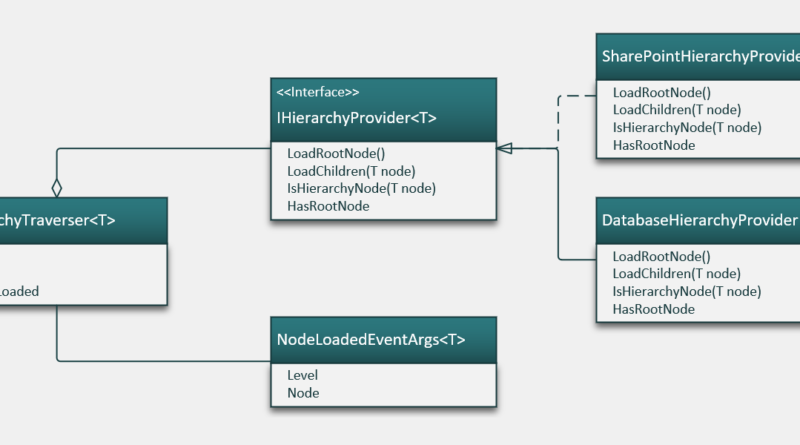

Better hierarchy provider

To make code more logical I also introduced – based on comments – root nodes collection. It means that there is no more need for checks if hierarchy has one single root node or not.

public interface IHierarchyProvider<T>

{

IList<T> LoadRootNodes();

IList<T> LoadChildren(T node);

}

As wrapper nodes hold reference to parent and level I also changed event arguments class to use node wrapper.

public class NodeLoadedEventArgs<T>

{

public NodeWrapper<T> Node { get; set; }

}

Optimizing hierachy traverser

Now we have all changes done and it’s time to write more effective hierarchy traverser. Instead of recursion it uses stack and single loop to traverse through hierarchies. Here is the optimized version of previous hierarchy traverser.

public class HierarchyTraverser<T>

{

public delegate void NodeLoadedEventHandler(object sender, NodeLoadedEventArgs<T> e);

public event NodeLoadedEventHandler NodeLoaded;

private readonly IHierarchyProvider<T> _provider;

private bool _stop = false;

public HierarchyTraverser(IHierarchyProvider<T> provider)

{

_provider = provider;

}

public void Start()

{

var rootNodes = _provider.LoadRootNodes();

var stack = new Stack<NodeWrapper<T>>();

foreach (var rootNode in rootNodes)

{

stack.Push(new NodeWrapper<T> { Node = rootNode, Level = 0, Parent = null });

}

while (stack.Count > 0)

{

var node = stack.Pop();

OnLoadNode(node);

var children = _provider.LoadChildren(node.Node);

if (children == null)

{

continue;

}

foreach (var childNode in children)

{

if (_stop)

{

break;

}

stack.Push(new NodeWrapper<T>

{

Node = childNode,

Level = node.Level + 1,

Parent = node

});

}

}

}

public void Stop()

{

_stop = true;

}

protected void OnLoadNode(NodeWrapper<T> node)

{

var args = new NodeLoadedEventArgs<T>

{

Node = node

};

NodeLoaded?.Invoke(this, args);

}

}

Let’s try out how these modifications work.

Hierarchy traverser can be written shorter using LINQ and lambdas but at this point I leave it like it is as. There’s more code, yes, but it is not too cryptic for developers who are not familiar with hierarchies or who are new on the field. When I get to API layer I will probably go here with shorter code.

Trying out modified hierarchy traverser

My main concern is stack overflow, of course. It should not happen with new implementation but still let’s try out to be sure. As many things changed in code I had to update integer hierarchy provider to reflect these changes.

class IntHeirarchyProvider : IHierarchyProvider<int>

{

public int MaxDepth { get; private set; }

public IntHeirarchyProvider(int maxDepth) => MaxDepth = maxDepth;

public bool HasRootNode => true;

public bool IsHierarchyNode(int node) => true;

public IList<int> LoadChildren(int node) => node < MaxDepth ? new List<int>() { node + 1 } : null;

public IList<int> LoadRootNodes() => new List<int> { 1 };

}

Test code needs small modifications before we can run it.

static void Main(string[] args)

{

var traverser = new HierarchyTraverser<int>(new IntHeirarchyProvider(100000));

traverser.NodeLoaded += (s, e) => { if (e.Node.Node % 1000 == 0) Console.WriteLine(e.Node.Level + 1); };

traverser.Start();

}

Now we don’t run to stack overflow anymore.

Updated SharePoint hierarchy provider

Here is updated SharePoint hierarchy provider.

public class SPHierarchyProvider : IHierarchyProvider<SPFolder>, IDisposable

{

private SPContentTypeId FolderContentTypeId = new SPContentTypeId("0x0120");

private SPSite _site;

private SPWeb _web;

private SPList _list;

public SPHierarchyProvider(string siteUrl, string webUrl, string listName)

{

_site = new SPSite(siteUrl);

_web = _site.OpenWeb(webUrl);

_list = _web.Lists[listName];

}

public IList<SPFolder> LoadChildren(SPFolder node)

{

return node.SubFolders.Cast<SPFolder>().ToList();

}

public IList<SPFolder> LoadRootNodes()

{

return new List<SPFolder> { _list.RootFolder };

}

#region IDisposable Support

private bool disposedValue = false;

protected virtual void Dispose(bool disposing)

{

if (!disposedValue)

{

if (disposing)

{

_web.Dispose();

_site.Dispose();

}

disposedValue = true;

}

}

public void Dispose()

{

Dispose(true);

}

#endregion

}

For this provider parent node cache is mandatory as asking parent folder of SPFolder makes round-trip to database and when exporting big document repositories this one round-trip makes huge difference.

Updated database hierarchy provider

Also hierarchy provider for simple SQL Server database needed updates after changes we made. Updated version of provider is here.

public class DatabaseHierarchyProvider : IHierarchyProvider<DataRow>

{

private readonly IDbConnection _connection;

public DatabaseHierarchyProvider(IDbConnection connection)

{

_connection = connection;

}

public IList<DataRow> LoadChildren(DataRow node)

{

using(var command = GetCommand(node))

{

var table = new DataTable();

table.Load(command.ExecuteReader());

return table.Rows.Cast<DataRow>().ToList();

}

}

private IDbCommand GetCommand(DataRow node)

{

if (node == null)

{

return GetRootItemsCommand();

}

return GetChildItemsCommand((int)node["Id"]);

}

private IDbCommand GetRootItemsCommand()

{

var sql = "SELECT * FROM Menu WHERE ParentId IS NULL";

var command = _connection.CreateCommand();

command.CommandText = sql;

return command;

}

private IDbCommand GetChildItemsCommand(int id)

{

var sql = "SELECT * FROM Menu WHERE ParentId = @Id";

var command = _connection.CreateCommand();

command.CommandText = sql;

var param = command.CreateParameter();

param.ParameterName = "@Id";

param.Value = id;

command.Parameters.Add(param);

return command;

}

public IList<DataRow> LoadRootNodes()

{

using (var command = GetCommand(null))

{

var table = new DataTable();

table.Load(command.ExecuteReader());

return table.Rows.Cast<DataRow>().ToList();

}

}

}

Wrapping up

Solving one simple problem may introduce many changes to codebase but if architecture or technical design of system gets better then these changes are always justified. ANd most important is to move towards bullet-proof code. This post introduced how to move from tail-recursion to stack and avoid stack overflow exception that was very easy to produce. The resulting components now have more power on working with hierarchies

Excellent!

I forgot mention that if the order of the nodes matters, then the calls to LoadRootNodes() and LoadChildren() should reverse the order of the results prior to pushing on the stack so that the nodes will eventually be processed in the original order when the event handler is called.

That’s the good point. If order is not exactly like it is in data store then it may cause problems. First victims will probably be those who write something for persistence layer (menus, breadcrumbs, category trees etc). I’m not sure yet if order of folders can affect direct migrations from one store to another where relations like follow-ups are immediately imported with document.

Anyway, I will use original ordering in code I’m writing for next post. Thanks for pointing to this issue.

It’s why in my gist I had the Reverse() call for the Order() method my depth first implementation, while my breadth first implementation just passes things through.

https://gist.github.com/azureskydiver/273b053d7e43c8de19e0658c35f0eae1

Reverse is good material to technical flame wars :) Depending on approach it creates new collection or changes the state of existing one. I think as far as I stay with enumerables that have Count property I can go with for-loop. Then there will be no copies and no state changes to original collection. Right now I don’t have any good example to take from practice when Reverse() can grow too expensive. But it’s thing to consider.

I think without using `SQL Server’s Common Table Expressions` there will be a lot of round trips to the db to have a hierarchy traverser.

I leave decision about CTE-s and other implementation specific details to developers who build hierarchy providers. Sure, they can load whole hierarchy from table(s) with one shot using CTE and select child nodes from DataTable they caches. But this is implementation detail.

The .NET Framework implementation the LINQ Reverse() extension method creates a new collection:

https://referencesource.microsoft.com/#System.Core/System/Linq/Enumerable.cs,3af306c560f8c669,references

As long as you are using reference types, this shouldn’t be too expensive unless the original data is particularly huge. Only references to the original objects are stored in the new collection. Creating this new collection shouldn’t affect the state of the original collection, or the objects within the collection. (That is assuming that your original collection is not dependent on side-effects.)

Now, if you were using value types, then yes, calling Reverse() can become very expensive very quickly.

Value types come probably in when somebody wants to apply this construct to do some math. Fibonacci sequence, by example. On business applications and integration side I don’t believe there will be IHierarchyProvider in use because there is always some kind of DTO or other object that is carrying information. I think going with reverse should be safe. Until somebody asks in comments to optimize it for integers or doubles :)

This is neat thanks for posting, is this on github? Id love to see it accompanied by some example code and tests.

Tory, it’s in Github now: https://github.com/gpeipman/HierarchyTraverser I will add examples over coming days.